How Can I Do Web Scraping To Detect Price Changes On Amazon

Write a Simple Amazon Price Scraper With Node.js, Scrape-it, and SQLite

Build an effective web scraper

If you're managing a store, or you're simply monitoring a list of items on Amazon and want to be notified every time a price changes, this quick tutorial is exactly for you.

Writing a simple but effective scraper in a handful of lines of code is extremely easy today, and we're here to prove it.

Let's start!

Kickstart

We are going to use NodeJS and scrape-it to write a simple script able to fetch Amazon prices, and SQLite to store items and their prices. We're using SQLite because of its simplicity and because, fundamentally, it's just a plain file sitting on the disk requiring no configuration whatsoever! And you can easily copy it around or export data as CSV.

Before starting: make sure you have NodeJS and Yarn (a NodeJS package manager) installed on your system.

1. Set up the Environment

Let's create a folder holding our project and install our dependencies:

    yarn init
    yarn add scrape-it sqlite3

Also, let's install sqlite3 on our system in order to set up our database. For Linux / Mac:

    sudo apt install sqlite3   # Ubuntu
    sudo pacman -S sqlite3     # Arch Linux
    brew install sqlite        # macOS

If you're on Windows, follow this guide.

2. Create our Database

Let's create a folder holding the database itself, its schema, and a seeder to store some dummy items for testing.

    mkdir db
    touch db/schema.sql
    touch db/seed.sql

We now edit schema.sql to define our db schema:

    CREATE TABLE items (
      id INTEGER PRIMARY KEY AUTOINCREMENT,
      name TEXT NOT NULL,
      asin TEXT NOT NULL,
      price INTEGER,
      created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP
    );

In my case, I want to scrape Amazon in order to monitor guitar pedal prices, so I will seed the database with some dummy items (you can use whatever you want):

    INSERT INTO items (name, asin)
    VALUES
      ('Boss BD-2', 'B0002CZV6E'),
      ('Ibanez TS-808', 'B000T4SI1K'),
      ('TC Flashback', 'JB02HXMRST4R');

The asin is basically the Amazon product id; you can easily get it from an Amazon product url:

    https://www.amazon.com/TC-Electronic-Flashback-Delay-Effects/dp/B06Y42MJ4N

Now, let's create the database using the sqlite3 package:

    cd db
    sqlite3 database.sqlite < schema.sql
    sqlite3 database.sqlite < seed.sql

And that's it! We now have some sample products to play with :)
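If you'd rather not copy the asin by hand, pulling it out of a product url can be sketched in a few lines. This is only a sketch: extractAsin is a hypothetical helper (it is not part of scrape-it or sqlite3), and it assumes the url contains a /dp/ segment followed by a 10-character id, like the example url above.

```javascript
// Hypothetical helper: pull the asin out of an Amazon product url.
// Assumption: the path contains "/dp/<ASIN>" and the asin is made of
// 10 uppercase letters or digits.
const extractAsin = (productUrl) => {
  const { pathname } = new URL(productUrl)
  const match = /\/dp\/([A-Z0-9]{10})(?:\/|$)/.exec(pathname)
  return match ? match[1] : null
}

console.log(extractAsin('https://www.amazon.com/Some-Product/dp/B06Y42MJ4N'))
console.log(extractAsin('https://amazon.com/dp/B0002CZV6E/ref=anything'))
console.log(extractAsin('https://www.amazon.com/'))
```

The helper returns null when no asin is found, so you can skip such urls instead of seeding the database with bad rows.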

3. The Scraper

Now, create a file index.js in the root of your project, fire up your favorite text editor and let's put some code in it!

The scraper will perform the following steps:

- Retrieve the list of items from our database

- For every item, scrape the relative Amazon page (using the asin of each product)

- Update the item price on the database

First, we declare dependencies and instantiate our database connector:

    const scrapeIt = require('scrape-it')
    const sqlite3 = require('sqlite3').verbose()
    const db = new sqlite3.Database('./db/database.sqlite')

We don't want to send too many requests to Amazon in a short period of time (let's be polite), so let's add a waiting function which we'll use after every scrape action:

    const wait = (time) => new Promise(resolve => setTimeout(resolve, time))

Here we have our scrape function:

    // scrape Amazon by asin (product id)
    const scrapeAmazon = (asin) => {
      const url = `https://amazon.com/dp/${asin}`
      console.log('Scraping URL', url)
      return scrapeIt(url, {
        price: {
          selector: '#price_inside_buybox',
          // turn e.g. "$19.99" into an integer number of cents (1999)
          convert: p => Math.round(parseFloat(p.split('$')[1]) * 100)
        }
      })
    }

It simply accepts the asin and calls the scrape-it function to collect the information we need from the page.

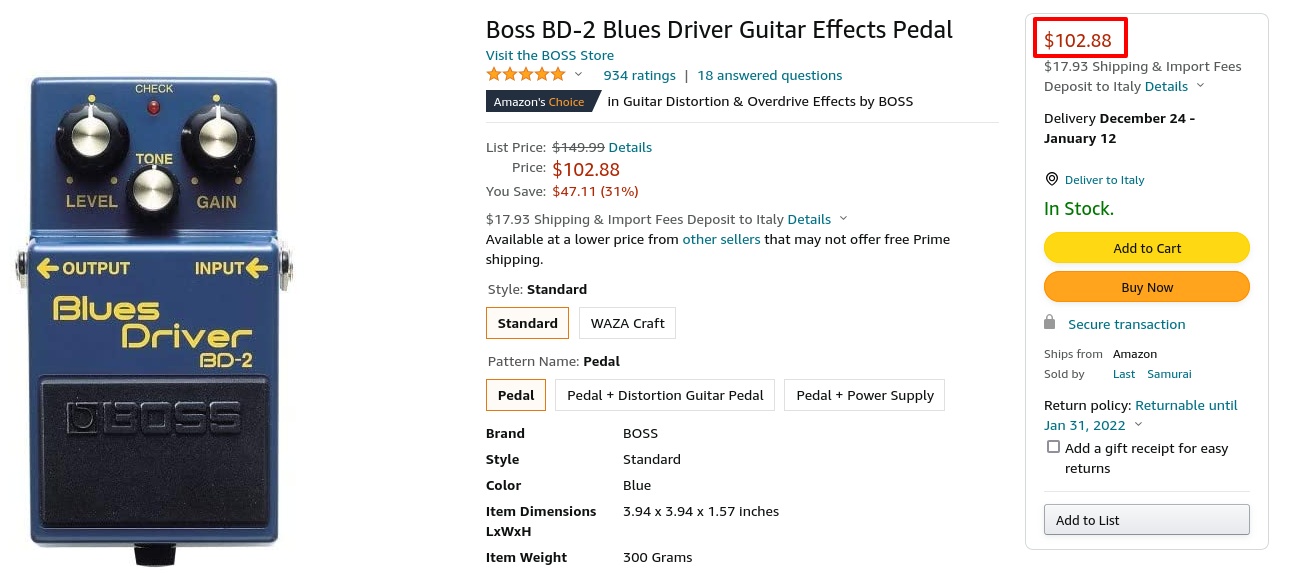

Specifically, we desire to scrape the price appearing on the right column of an Amazon production page (red box):

The process is very simple:

- Go to the Amazon product page URL in your browser

- Open up the Developer Tools

- Pick the HTML price element

- Get whatever selector / id / class we might need to identify that element

In our case, the id will be just enough ( #price_inside_buybox ).

Keep in mind that you might need to check if Amazon changes the element id from time to time. There are better solutions to this (such as looking for the dollar sign "$" in the page), but let's keep it simple for now.
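To give an idea of what the dollar sign approach could look like, here is a rough sketch. findDollarAmounts is a hypothetical helper, the sample markup is made up, and a real product page contains many dollar amounts (list price, shipping, related items), so picking the right one still takes some care.

```javascript
// Hypothetical helper: collect every "$" amount found in a chunk of text or HTML.
// The pattern accepts optional thousands separators and optional cents.
const findDollarAmounts = (text) =>
  text.match(/\$\s?\d+(?:,\d{3})*(?:\.\d{1,2})?/g) || []

// Made-up markup, just to exercise the helper offline.
const sample = '<span>$19.99</span> <s>List: $1,024.50</s> <p>Ships free</p>'

console.log(findDollarAmounts(sample))
console.log(findDollarAmounts('Currently unavailable'))
```

The first call finds both amounts in the sample, the second one returns an empty array because there is nothing to find.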

We also specify a convert function to parse the price as an Integer (to avoid storing floats on the database).

And that's it! At this point, scrape-it will do the rest, outputting all the elements it finds (in our case, just one) under a key called price :)

Also, we return a scrape-it Promise so that we can conveniently use async / await.
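If you want the convert step to be a bit more forgiving, for example with thousands separators or a missing price, something along these lines might help. It's a sketch under my own assumptions: parsePriceToCents and formatCents are hypothetical names, and the input is assumed to be a US-style price string such as "$1,299.99".

```javascript
// Hypothetical helper: turn a price string into an integer number of cents.
// Returns null when no "$" amount is present (e.g. "Currently unavailable").
const parsePriceToCents = (text) => {
  const match = /\$\s?(\d+(?:,\d{3})*)(?:\.(\d{1,2}))?/.exec(text || '')
  if (!match) return null
  const dollars = parseInt(match[1].replace(/,/g, ''), 10)
  // "5" after the dot means 50 cents, a missing fraction means 0 cents
  const cents = parseInt((match[2] || '0').padEnd(2, '0'), 10)
  return dollars * 100 + cents
}

// Hypothetical helper: turn cents back into a readable string.
const formatCents = (cents) => '$' + (cents / 100).toFixed(2)

console.log(parsePriceToCents('$1,299.99')) // 129999
console.log(parsePriceToCents('$20'))       // 2000
console.log(parsePriceToCents('Currently unavailable')) // null
console.log(formatCents(129999))            // $1299.99
```

Working with dollars and cents as separate integers avoids floating point math entirely, which fits the idea of storing integers on the database.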

In order to update the price on our database we implement the following function:

    // update pedal price
    const updatePrice = (asin, price) => {
      console.log('Updating item:', asin, price)
      // placeholders (?) let sqlite3 bind the values safely
      db.run(
        'UPDATE items SET price = ? WHERE asin = ?',
        [price, asin],
        (err) => {
          if (err) {
            console.log(err)
          }
        }
      )
    }

Pretty easy: we just use the asin to look up the entry we want to update, and perform an update on the database.
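Since the whole point is to notice when a price changes, you may want to compare the stored price with the freshly scraped one before overwriting it. Here is a minimal sketch of that comparison. describePriceChange is a hypothetical helper and both prices are assumed to be integers in cents (or null when unknown).

```javascript
// Hypothetical helper: compare the stored price with the freshly scraped one.
// Both values are integers in cents; null means "no price known".
const describePriceChange = (oldPrice, newPrice) => {
  if (newPrice == null) return { changed: false, reason: 'no new price' }
  if (oldPrice == null) return { changed: true, direction: 'new', diff: null }
  const diff = newPrice - oldPrice
  if (diff === 0) return { changed: false, reason: 'same price' }
  return { changed: true, direction: diff < 0 ? 'down' : 'up', diff }
}

console.log(describePriceChange(9999, 8999)) // price dropped by 1000 cents
console.log(describePriceChange(9999, 9999)) // nothing to report
console.log(describePriceChange(null, 9999)) // first price we ever saw
```

When changed is true you could log it, send yourself an email, or do whatever kind of notification you prefer.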

Finally, here's our main function which goes through all the items in the database and calls the functions we just prepared:

    const scrape = async () => {
      db.all('SELECT * FROM items', [], async (err, items) => {
        if (err) {
          console.log(err)
          return
        }
        console.log(items)
        for (const item of items) {
          const scraped = await scrapeAmazon(item.asin)
          if (scraped.response.statusCode === 200 && scraped.data.price) {
            updatePrice(item.asin, scraped.data.price)
          } else {
            console.log('Out of stock')
          }
          await wait(2000)
        }
      })
    }

    scrape()

Now simply head to your terminal and run it with node index.js .

You can find the code on my GitHub repo.

That's it!
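If you'd like to try the polite one-request-at-a-time loop without hitting Amazon, the same control flow can be sketched with a stand-in scraper. Everything here is an assumption for illustration: scrapeSequentially and fakeScrape are hypothetical names, and the prices are made up.

```javascript
// Resolve after `time` milliseconds (same idea as the wait helper above).
const wait = (time) => new Promise(resolve => setTimeout(resolve, time))

// Hypothetical helper: go through `items` one at a time, call the injected
// `scrapeFn(asin)` and pause `delayMs` between requests.
const scrapeSequentially = async (items, scrapeFn, delayMs) => {
  const results = []
  for (const item of items) {
    try {
      const price = await scrapeFn(item.asin)
      results.push({ asin: item.asin, price: price || null })
    } catch (err) {
      // a failed request should not abort the whole run
      results.push({ asin: item.asin, price: null, error: err.message })
    }
    await wait(delayMs)
  }
  return results
}

// Stand-in scraper with made-up prices (in cents) so the sketch runs offline.
const fakePrices = { B0002CZV6E: 9999, B000T4SI1K: 17900 }
const fakeScrape = async (asin) => {
  if (!(asin in fakePrices)) throw new Error('not found')
  return fakePrices[asin]
}

scrapeSequentially(
  [{ asin: 'B0002CZV6E' }, { asin: 'B000T4SI1K' }, { asin: 'UNKNOWN' }],
  fakeScrape,
  10
).then(results => console.log(results))
```

Passing the scrape function in as an argument means you can swap fakeScrape for the real scraper later, and a single failing item no longer stops the loop.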

Wrappin' Up

Whether you need a plain and simple web scraper or you're planning to build the next-gen Cloud scraping platform scaling to millions of users, this little guide should provide you with the very basic building block for anything you might want to achieve.

Actually, this is exactly what I've used to find prices for my website rigfoot.com

As an exercise, you might try adding a new scraping function targeting a different website, such as eBay or Thomann!

I really do hope that you found it useful. I would love to hear your comments and suggestions on features you'd like to see.

Thanks!


Source: https://betterprogramming.pub/write-a-simple-amazon-price-scraper-with-node-scrape-it-and-sqlite-64e6a7667c91

Posted by: harrisfromment63.blogspot.com
